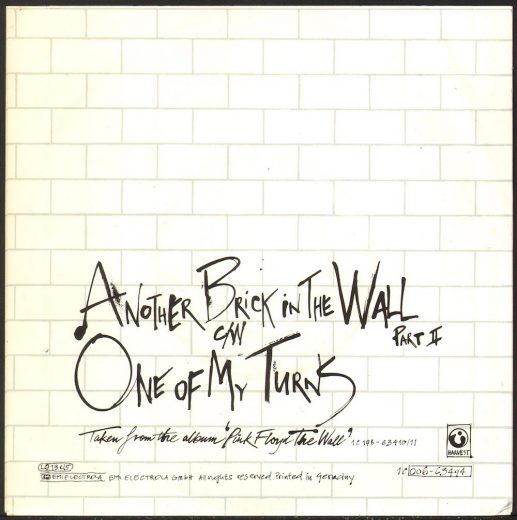

Scientists are still learning new things about the mysterious effects that music has on the brain, and a few of them just made a breakthrough with the help of Pink Floyd. Genetic Engineering & Biotechnology News reports that researchers at UC Berkeley have figured out how to reconstruct a recognizable version of Pink Floyd's 1979 hit "Another Brick In The Wall (Part II)" by decoding the brain activity of neurosurgical patients, recorded through electrodes placed directly on the surface of their brains.

This all sounds terribly dystopian, especially when there's a Pink Floyd song involved, but this is one of those rare music-based scientific studies that doesn't have anything to do with AI. Instead, this could really help people. These researchers are figuring out how brain-machine interfaces can reconstruct the musical, emotional aspects of human speech, so that the speech devices used by patients who are unable to speak can sound less robotic than the systems currently in place.

According to the researchers, they were able to reconstruct actual lyrics ("all in all it was just a brick in the wall") from the brainwaves that they recorded. (The real Pink Floyd lyric is "all in all, it's just another brick in the wall," but I guess that's close enough.) The words were muddy, but they could be understood, and the song's rhythms were intact. It's the first time anyone has reconstructed a recognizable song from brain recordings.

The researchers are trying to decode what the article calls "prosody -- rhythm, stress, accent, and intonation," which carries information beyond the literal words that people say. This could be a huge boon to people who have lost the ability to speak because of strokes, paralysis, or some other ailment. The auditory centers are deep within the brain, but now there's some hope that those brainwaves could one day be read through sensors on the scalp.

In the article, neurologist Robert Knight says:

> It’s a wonderful result. One of the things for me about music is it has prosody and emotional content. As this whole field of brain-machine interfaces progresses, this gives you a way to add musicality to future brain implants for people who need it, someone who’s got ALS or some other disabling neurological or developmental disorder compromising speech output. It gives you an ability to decode not only the linguistic content, but some of the prosodic content of speech, some of the affect. I think that’s what we’ve really begun to crack the code on.

The article has a lot more information that I am not smart enough to understand, and you can read it at Genetic Engineering & Biotechnology News.